Traditionally, servers rely on CPUs for processing: general-purpose components built to handle a wide range of computing requirements, which are well suited to traditional applications such as email servers and storage servers. However, a growing number of applications benefit enormously from using a graphics card for processing.

A GPU server is a server fitted with graphics cards in order to harness the raw processing power of GPUs. Through offloading, the CPU sends suitable tasks to the GPUs, greatly increasing server performance for those workloads.

GPUs are designed for highly parallel workloads and thrive in the most computationally intense applications.

GPU dedicated servers are often used for fast 3D processing, large-scale number crunching and floating-point arithmetic, where the design of graphics processing units allows them to run computations considerably faster than a CPU could. Although they often operate at lower clock speeds than CPUs, GPUs can have thousands of cores, allowing them to run thousands of individual threads at the same time, an approach known as parallel computing.

In computationally intensive environments, offloading tasks to a GPU is an excellent way to minimise pressure on the CPU and mitigate potential performance bottlenecks.

A significant number of the Big Data tasks that create business value involve repeating the same operations over and over. The huge number of cores available in GPU servers is well suited to this type of work: the work is split across the cores so that voluminous data sets can be processed at a faster rate.
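The split-and-repeat pattern described above can be sketched in a few lines of Python. This is a minimal, CPU-side illustration under our own assumptions, not Broadberry or NVIDIA code: the `normalise` operation, the `split` and `parallel_map` helpers and the worker count are all hypothetical. On a GPU server the same pattern would be expressed through a GPU programming framework such as CUDA, with thousands of threads rather than a handful of workers.

```python
from concurrent.futures import ThreadPoolExecutor


def normalise(chunk):
    # Hypothetical per-element operation: the same arithmetic is repeated
    # for every value, which is what makes the work easy to parallelise.
    return [x * 2.0 + 1.0 for x in chunk]


def split(data, n_parts):
    # Divide the data set into at most n_parts roughly equal chunks.
    if not data:
        return []
    size = -(-len(data) // max(1, n_parts))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_map(data, n_workers=4):
    # Hand each chunk to a separate worker, then stitch the results back
    # together in the original order (executor.map preserves ordering).
    # Note: in CPython, threads only illustrate the structure here; the GIL
    # prevents a real speed-up for pure-Python arithmetic like this.
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        results = pool.map(normalise, chunks)
    return [value for part in results for value in part]


if __name__ == "__main__":
    print(parallel_map(list(range(10))))
```

Because each chunk is processed independently, adding more workers (or, on a GPU, more cores) lets more chunks run at once, and the final flattening step restores the original order.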

For suitable workloads, GPU servers tend to use less energy than CPU-only servers delivering the same throughput, reducing total cost of ownership (TCO) over the long term.

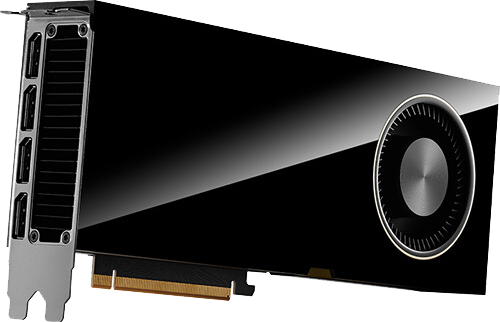

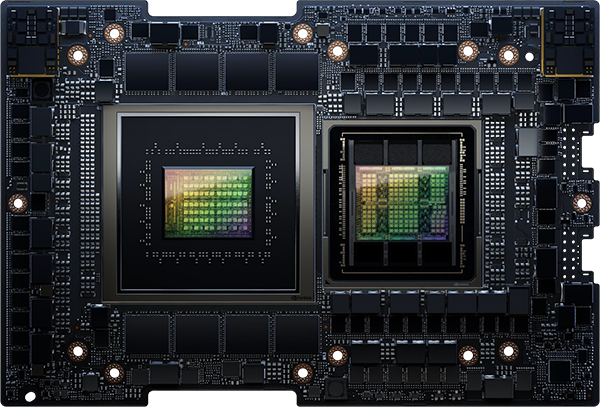

Broadberry GPU optimised servers feature up to 4TB of RAM and can be powered by the latest Intel Xeon Scalable processors or AMD EPYC series processors. With a massive range of GPU options available, Broadberry GPU dense servers can be configured with up to 10x NVIDIA Tesla GPU cards. NVIDIA Tesla is the world's leading platform for accelerating data centres: deployed by many of the planet's largest supercomputing centres and enterprises, it combines GPU accelerators, interconnect technologies, accelerated computing systems, development tools and applications to enable faster scientific discoveries and big data insights.

At the centre of the NVIDIA Tesla platform are the massively parallel GPU accelerators, which deliver significantly higher throughput for compute-intensive workloads without a corresponding increase in data centre footprint or power consumption.

Broadberry GPU servers are built around industry-leading GPU-optimised server chassis, designed and rigorously tested to run up to 10x GPUs for massively parallel computing whilst staying cool thanks to the latest advances in server cooling technology.

Our online configurator allows you to configure your GPU optimised server with a wide range of powerful processors and RAM options, as well as SSD, NVMe or HDD storage.

GPUs excel at massively parallel operations, performing them up to 10x faster than their CPU counterparts. As GPUs are designed to perform parallel operations on multiple sets of data, they can quickly render high-resolution images and 3D video, analyse large data sets faster or train your AI applications. NVIDIA Tesla-based GPU servers are also often used for non-graphical tasks, including scientific computation and machine learning.

The number of GPUs that a GPU optimised server could be configured with used to be limited by three main factors: the number of PCIe lanes available from the CPU, physical space in the chassis, and the power that the system's power supply could provide. Working closely with our partners, Broadberry's GPU server range utilises the latest technical advances in the industry to allow up to 10x double-width GPU cards in a system, or 20x single-width cards.

Artificial Intelligence Server for HMRC Fraud Detection

Ultra-Performance AI Server for Samsung

Our Rigorous Testing

Un-Equaled Flexibility

Call Our UK Sales Team Now